Low Latency FPV Streaming with the Raspberry Pi

2015-11-07

One of the biggest challenges in running my local quadcopter racing group has been overlapping video channels. The manual for the transmitters lists a few dozen channels, but in practice, only four or five can be used at the same time without interference.

The transmitters being used right now are analog. Digital video streams could make much more efficient use of the spectrum, but encoding and decoding them can introduce latency. Lately, I've noticed a few posts around /r/raspberry_pi about how to do an FPV stream with an RPi, and I've been doing some experiments along these lines, so I thought it was a good time to share my progress.

It's tempting to jump right into HD resolutions. Forget about it; it's too many pixels. Fortunately, since we're comparing to analog FPV gear, we don't need that many pixels to be competitive. The Fatshark Dominator V3s are only 800x600, and that's a $600 set of goggles.

You'll want to disable wireless power management. Left on, it tends to take the wireless interface up and down a lot, introducing a delay each time. It's not saving you that much power; consider that an RPi takes maybe 5 watts, while on a 250-sized quadcopter, the motors can easily take 100 watts or more each. So shut it off by adding this to the stanza for your wireless interface in /etc/network/interfaces:

wireless-power off

And reboot. That should take care of that. Check the output of iwconfig to be sure. You should see a line that says "Power Management:off".
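If you'd rather script that check than eyeball it, something like the following should do. It's a minimal sketch: the function name `power_mgmt_is_off` is made up here, and `wlan0` is an assumed interface name that may differ on your system.

```shell
#!/bin/sh
# Succeeds if the iwconfig output read from stdin reports power management as off.
# power_mgmt_is_off is a hypothetical helper name, not part of wireless-tools.
power_mgmt_is_off() {
    grep -q 'Power Management:off'
}

# Usage on the RPi (wlan0 is an assumed interface name):
#   iwconfig wlan0 2>/dev/null | power_mgmt_is_off && echo "power management is off"
#
# To switch it off right away without a reboot (this does not persist):
#   sudo iwconfig wlan0 power off
```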

You'll want to install GStreamer 1.0 with the rpicamsrc plugin. This lets you take the images directly off the RPi camera module, without having to pipe the output of raspivid into GStreamer, which would introduce extra lag.

With GStreamer and its myriad plugins installed, you can start this up on the machine that will show the video:

gst-launch-1.0 udpsrc port=5000 \
! gdpdepay \
! rtph264depay \
! avdec_h264 \
! videoconvert \
! autovideosink sync=false

This will listen on UDP port 5000, waiting for an RTP h.264 stream to come in, and then automatically display it by whatever means works for your system.

Now start this on the RPi:

gst-launch-1.0 rpicamsrc bitrate=1000000 \
! 'video/x-h264,width=640,height=480' \
! h264parse \
! queue \
! rtph264pay config-interval=1 pt=96 \
! gdppay \
! udpsink host=[INSERT_IP_ADDR] port=5000

Modify that last line to have the IP address of the machine that's set to display the stream. This starts grabbing 640x480 frames off the camera with h.264 encoding, wraps them up in RTP packets, and sends them out over UDP.
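Since the destination address is the only thing that changes between setups, the sender command can be wrapped in a small function. This is only a sketch: `sender_pipeline` and its arguments are names invented here, and it just prints the command so you can inspect it before running it.

```shell
#!/bin/sh
# Print the sender pipeline for a given viewer address.
# sender_pipeline is a hypothetical helper; the port defaults to 5000.
sender_pipeline() {
    host="${1:-}"
    port="${2:-5000}"
    if [ -z "$host" ]; then
        echo "usage: sender_pipeline VIEWER_IP [PORT]" >&2
        return 1
    fi
    printf '%s\n' "gst-launch-1.0 rpicamsrc bitrate=1000000 ! video/x-h264,width=640,height=480 ! h264parse ! queue ! rtph264pay config-interval=1 pt=96 ! gdppay ! udpsink host=$host port=$port"
}

# Example (192.168.1.50 is a placeholder address):
#   sender_pipeline 192.168.1.50        # print the command
#   sender_pipeline 192.168.1.50 | sh   # print it and run it
```

Printing rather than executing keeps the helper easy to check on a machine that has no camera attached.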

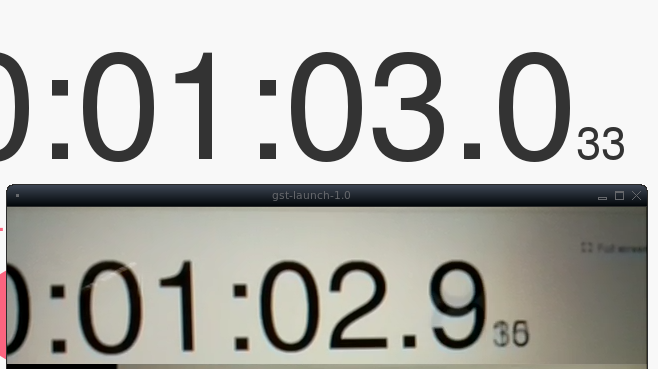

On a wireless network with decent signal and OK ping times (80ms average over 100 pings), I measured about 100ms of video lag. I measured that by displaying a stop watch on my screen, and then pointing the camera at that and taking a screenshot:

This was using an RPi 2, decoding on a fairly modest AMD A8-6410 laptop.
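To get a comparable ping average on your own network, the summary line that ping prints at the end can be parsed with awk. A rough sketch, assuming Linux iputils-style output; `avg_rtt_ms` is a name made up for this example.

```shell
#!/bin/sh
# Pull the average round-trip time (in ms) out of ping's summary line, which
# looks like this (numbers made up for illustration):
#   rtt min/avg/max/mdev = 1.2/3.4/5.6/0.7 ms
# avg_rtt_ms is a hypothetical helper; it reads ping output on stdin.
avg_rtt_ms() {
    # Splitting on "/" puts the average in the fifth field.
    awk -F'/' '/min\/avg\/max/ { print $5 }'
}

# Usage (192.168.1.50 is a placeholder for the RPi's address):
#   ping -c 100 192.168.1.50 | avg_rtt_ms
```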

I'd like to tweak that down to the 50-75ms range. If you're willing to drop some security, you could probably bring down the lag a bit by using an open WiFi network.

I'll be putting together some estimates of bandwidth usage in another post, but suffice it to say, a 640x480@30fps stream comes in under 2Mbps with decent quality. There will be some overhead on top of that for things like frame and protocol headers, but that suggests a 54Mbps wireless connection could carry more than 10 pilots' streams without trouble, and that's on just one WiFi channel.
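The back-of-the-envelope math looks something like this. It's a sketch, not a measurement: `max_streams` is a made-up helper, and the 50% usable-throughput figure is an assumption on my part, since real WiFi throughput falls well short of the nominal link rate.

```shell
#!/bin/sh
# Rough count of how many video streams fit on one WiFi channel.
# max_streams LINK_KBPS STREAM_KBPS [USABLE_PCT]   (hypothetical helper)
max_streams() {
    link_kbps="$1"
    stream_kbps="$2"
    usable_pct="${3:-50}"   # assumption: about half the nominal rate is usable
    echo $(( link_kbps * usable_pct / 100 / stream_kbps ))
}

max_streams 54000 2000      # prints 13 with the assumed 50% usable throughput
```

Even with that pessimistic assumption, the count stays above 10 streams per channel.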